Reality-based AI regression harness

Replay tens of thousands of real calls as deterministic conversations with production context so AI-authored handlers prove they still honor contracts, headers, and latency budgets.

Runtime validation for Claude Code, Cursor, Copilot, and MCP agents.

AI coding tools generate code 10x faster, but they have never seen your production traffic. Speedscale captures real request patterns and replays them against AI-generated changes, catching behavioral failures that static analysis cannot see.

No credit card required • 5-minute setup • 30-day free trial

FLYR, Sephora, IHG, and platform teams worldwide lean on Speedscale to replay real bookings, loyalty lookups, and commerce flows before merging agent-authored code.

The Validation Gap

Speedscale loads your recorded production traffic into deterministic sandboxes with production context, so every AI-assisted pull request ships with evidence drawn from repeatable requests and responses. Replicating real production services becomes as easy as running a test.

Use the comparison below to show stakeholders exactly where the Validation Gap lives in your pipeline.

| Capability | Static analysis | AI self-correction | Speedscale |
|---|---|---|---|
| Understands real production variability | Schemas only. No live traffic. | Guesses from diffs and prompts. | Replays captured conversations byte-for-byte. |
| Confirms downstream contracts | Focuses on syntax and lint. | Relies on the model to self-grade. | Validates payload formats, auth, and SLAs. |
| Runs inside CI without staging debt | Yes, but limited coverage. | Needs human babysitting. | Drops recorded traffic into any pipeline and stands up precise replicas of downstream systems with realistic data, without the cost and overhead of a staging environment. |
| Creates audit-ready evidence | Log output at best. | Opaque reasoning. | Produces diff reports ready to attach to pull requests. |

Surface the exact request that an AI-generated change broke, not just a stack trace.

Share traffic snapshots with MCP agents so they can reproduce defects with production context without downloading prod data.

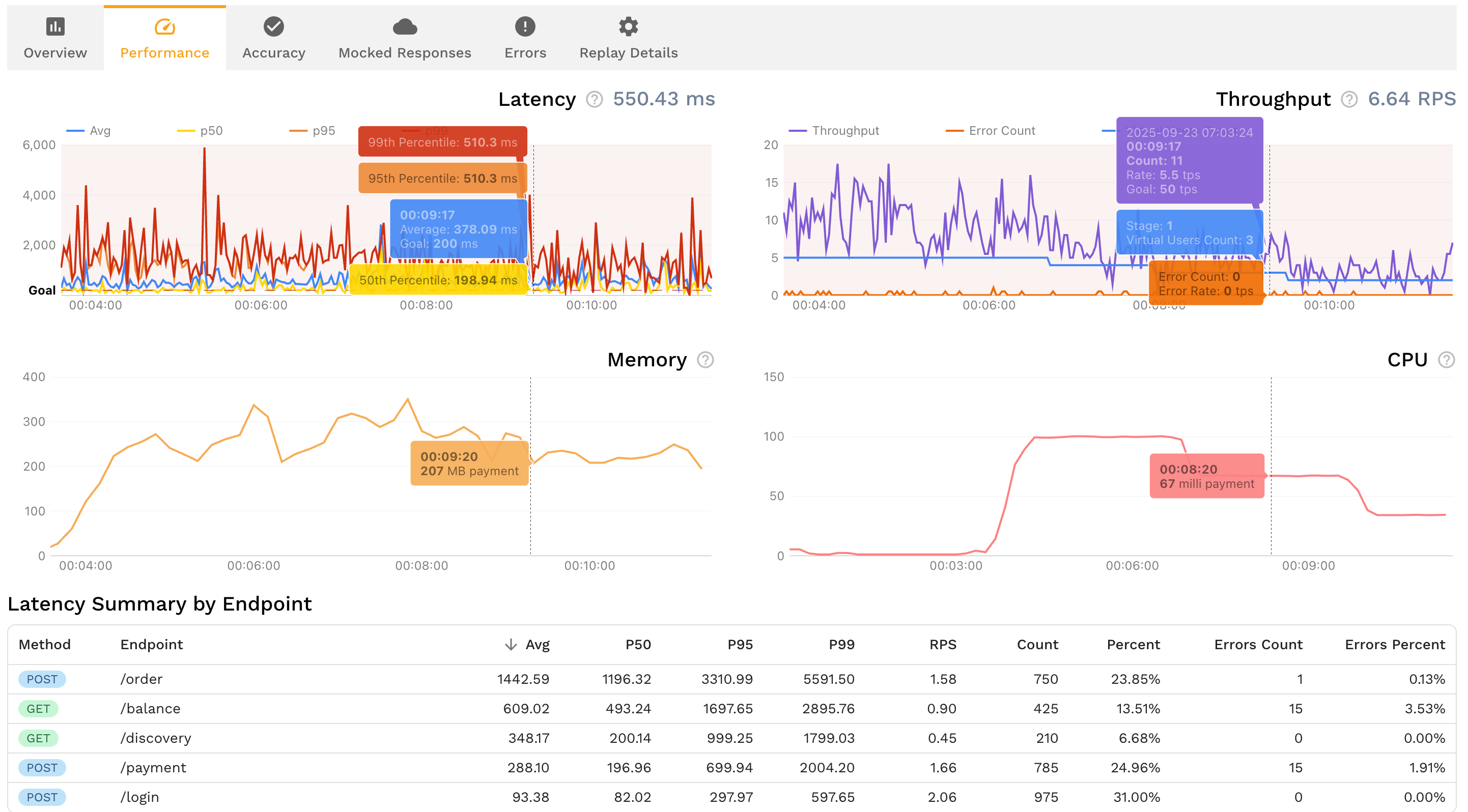

Compare before-and-after latency, payloads, and retries from deterministic runs in a single diff report.
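To make the idea of a before-and-after diff concrete, here is a minimal Python sketch that pairs calls from two replay runs by request ID and flags status, payload, retry, and latency-budget differences. The `Observed` record, its fields, and the `diff_runs` helper are hypothetical illustrations, not Speedscale's report format or API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Observed:
    """One replayed call. Hypothetical fields, not Speedscale's schema."""
    request_id: str
    status: int
    body: dict
    latency_ms: float
    retries: int


def diff_runs(before: list[Observed], after: list[Observed],
              latency_budget_ms: float) -> list[tuple[str, str]]:
    """Pair calls by request_id and list differences between two runs."""
    baseline = {o.request_id: o for o in before}
    findings: list[tuple[str, str]] = []
    for cand in after:
        base = baseline.get(cand.request_id)
        if base is None:
            findings.append((cand.request_id, "unexpected request"))
            continue
        if cand.status != base.status:
            findings.append((cand.request_id, f"status {base.status} -> {cand.status}"))
        if cand.body != base.body:
            findings.append((cand.request_id, "payload changed"))
        if cand.retries > base.retries:
            findings.append((cand.request_id, f"retries {base.retries} -> {cand.retries}"))
        if cand.latency_ms > latency_budget_ms:
            findings.append((cand.request_id,
                             f"latency {cand.latency_ms:.0f}ms over {latency_budget_ms:.0f}ms budget"))
    # Calls that existed in the baseline run but never appeared in the new run.
    seen = {o.request_id for o in after}
    for rid in baseline:
        if rid not in seen:
            findings.append((rid, "missing from new run"))
    return findings


if __name__ == "__main__":
    before = [Observed("r1", 200, {"total": 10}, 40.0, 0)]
    after = [Observed("r1", 200, {"total": 11}, 80.0, 1)]
    for request_id, finding in diff_runs(before, after, latency_budget_ms=50):
        print(request_id, finding)
```

Running it prints three findings for `r1`: `payload changed`, `retries 0 -> 1`, and `latency 80ms over 50ms budget`. Because both runs are deterministic replays of the same recorded requests, any difference can be attributed to the code change rather than to drifting test data.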

Attach validation reports directly to GitHub, GitLab, or Bitbucket pull requests.

Drop Speedscale into your CI run or MCP workflow to prove AI-authored code preserves production behavior before customers ever touch it.

Close the Validation Gap across environments, data, and AI-driven release cadences.

Proxy Kubernetes, ECS, desktop, or agent traffic once and share the snapshot with every branch without recreating environments.

See exactly where static tooling stops and runtime validation starts so you can prioritize the riskiest AI diffs first.

Mask sensitive fields automatically while preserving structure so governance teams sign off on replaying production data.
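One way to read "mask while preserving structure" is sketched below in Python: keys, nesting, and value types stay intact, while values under sensitive keys are replaced. The sensitive-key list, the `masked-` token format, the zeroing of numbers, and the `mask` helper are all illustrative assumptions, not Speedscale's masking rules. Strings are replaced with a deterministic hash-based token so the same input always masks to the same output, which keeps values that correlate across calls (for example, a user ID reused in later requests) consistent after masking.

```python
import hashlib

# Hypothetical list of sensitive keys; real policies would be configurable.
SENSITIVE_KEYS = {"email", "card_number", "ssn", "authorization"}


def _token(value: str) -> str:
    # Deterministic: identical inputs map to identical tokens.
    digest = hashlib.sha256(value.encode("utf-8")).hexdigest()
    return "masked-" + digest[:12]


def _mask_scalar(value):
    """Replace a scalar with a placeholder of the same JSON type."""
    if value is None:
        return None
    if isinstance(value, bool):  # check bool before int: bool subclasses int
        return False
    if isinstance(value, int):
        return 0
    if isinstance(value, float):
        return 0.0
    return _token(str(value))


def mask(node, sensitive=SENSITIVE_KEYS, _masking=False):
    """Return a masked copy of a JSON-like value; keys and nesting are kept.

    Once a sensitive key is reached, every scalar beneath it is masked.
    """
    if isinstance(node, dict):
        return {k: mask(v, sensitive, _masking or k.lower() in sensitive)
                for k, v in node.items()}
    if isinstance(node, list):
        return [mask(item, sensitive, _masking) for item in node]
    return _mask_scalar(node) if _masking else node
```

The function builds new dicts and lists rather than editing in place, so the original recording is left untouched.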

Give Copilot, Cursor, Codex, Antigravity, and Claude agents the exact requests and responses they need to triage regressions without guesswork.

Attach machine-readable diffs, severity, and remediation guidance directly to pull requests so reviewers stay unblocked.

Replay live traffic with redacted payloads and review contract diffs before you merge the next AI-assisted pull request.